These days, as a photographer, one strives constantly to not become a data center administrator. What with endless upgrades, updates, revisions, patches, security alerts, subscription management, feed management and the like, it’s a wonder that we have any time left to actually take and create photographs. Consequently, subjects such as backing up one’s data usually fall to the bottom of the list in terms of priorities. Then, ensuring the integrity of the data is even farther down the list of things to do. As usual, one day we get the dreaded "can’t read Drive X", only to throw up our hands in despair.

The catch with data backup is not that it’s a boring subject (it is), but that there are a huge number of options and strategies one can pursue to achieve a desired end. What is that end, precisely? How should I go about achieving it? What will it cost? This article will attempt to address a few of these issues.

______________________________________________________________

The Storage Dilemmas – Size, Speed, Integrity, Cost:

Size – Face it, digital files get larger, not smaller, every year. With sensor sizes increasing, this trend shows no sign of abating. In my case, I shoot a PhaseOne P45+, and every TIFF file is 224.6MB in size. I recently returned from Antarctica with 3000+ files, each of them 224.6MB once converted from RAW. A working pro I know well shoots 3GB *per week* of keeper job images. It’s obvious we need large, inexpensive storage. 2TB SATA drives are now $299 MSRP and can easily be assembled into inexpensive arrays; more on this in a second.

Speed –

If I have to move, say, 1TB of data, I want it to move rapidly. Moving it over most networks is slow and time consuming. Sneaker net, namely moving a physical disk with a copy on it, makes a lot of sense in this respect.

Integrity –

Is the backup any good? How do I know whether it is damaged before I need to use it?

Cost –

Can all problems be solved with enough money? Not in this case; some very expensive and elaborate systems no longer suit the needs of photographers.

In this article I will describe a RAID system I consider reliable, backed up to Amazon’s S3 infrastructure continuously. This approach works unobtrusively, reliably and quietly.

______________________________________________________________

The Technical Landscape for Data Storage:

Over the last 15-20 years, various strategies for increasing data reliability have emerged, with RAID (Redundant Array of Inexpensive Disks), which came out of UC Berkeley, as the clear winner. What RAID does is write the data to disk along with a calculated data protection value, which is usually placed on a separate drive. If the data is damaged, one can reconstruct it from that protection value and the other data. There are several variations of RAID implementations, which allow mirroring (copy), striping (speed oriented), parity (protection) and combinations of these. For you technical types, RAID 0, 1, 5, 5EE, 6, 10, 5+0 and many other flavors are all around. I will concentrate on RAID 5 and 6, which provide differing levels of data protection. Here’s a good explanation.

A RAID implementation can be in hardware or software. Having your Mac perform RAID on its disks is a software example; having a controller in your computer do the RAID is a hardware example.

As mentioned before, the data protection in RAID 5 occurs because a ‘check’ number is calculated as the data is written and is then stored on a separate disk. If the original data is damaged, the missing or damaged data can be mathematically reconstructed. This is known as a ‘rebuild’. The downside is that the system can tolerate losing only one drive, not two, and a rebuild can take a long, long time for a large data set. We recently rebuilt a large array at a television studio, with about 12TB of data. It took a week to rebuild, and the entire system was down until that operation completed. Various flavors of RAID controllers can do a rebuild in the background, but the plain fact is that it is going to take anywhere from hours to days. One note on RAID: having participated in creating a RAID software controller long ago, I can say that it takes about 2 years of operation to really get the bugs out in every case. So stay away from young implementations and, most of all, stay away from any "it’s a proprietary implementation that’s better than RAID, including our secret sauce". You don’t want to be stuck with some bizarre failure that no one can understand or fix.
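The ‘check’ number is easiest to picture as a simple XOR parity. What follows is a minimal sketch, assuming three data blocks and one parity block; the block contents and the `xor_blocks` helper are made up for illustration, and real controllers work on much larger stripes and rotate the parity across drives.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data blocks, as if striped across three drives (illustrative values).
data = [b"PHOTO-01", b"PHOTO-02", b"PHOTO-03"]

# The parity ('check') block that would be written to a fourth drive.
parity = xor_blocks(data)

# Simulate losing the second drive: XOR the surviving blocks with the parity
# block to recover the missing one. This is the essence of a 'rebuild'.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

assert rebuilt == data[1]
```

Because one parity block can stand in for only one missing block, a second failure during the rebuild leaves nothing to reconstruct from, which is why single parity tolerates only one lost drive.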

If you lose two drives you are out of luck, so RAID 5EE, 5+0, 6 and other fancy implementations evolved, not only to allocate spare drives but also to mathematically increase the protection calculations across the data. RAID 6 is essentially RAID 5 with a second, independent set of parity blocks, both distributed across all the drives in the array, so you can lose two drives and survive (hopefully). One can go on endlessly, copying copies of copies, hierarchies of copies and so on, as long as your budget holds out! There are pluses and minuses to each approach, and searching on the net leads to many interesting discussions of them (non Computer Science folk: boredom warning!).

At the same time as RAID technology evolved, so did the networks that connect the drives to the computer. In particular, Storage Area Networks (SANs) evolved, with Fiber Channel being the leading choice. Unlike 3 years ago, one can now purchase a Fiber Channel interface card for a Mac for less than $200. Without getting into network fundamentals, FC is optimized for transferring blocks of data at high speed, namely 3Gb/s. One can choose to buy a small FC switch if connecting to multiple computers, however this is NOT necessary to get started.

What no one saw coming, however, was the move to services in the network which could provide high levels of data security at low cost. In particular, Amazon’s Simple Storage Service (S3) arrived, allowing you to use Amazon’s capacity for your data at a low price (currently $0.15/GB/mo. plus transfer charges). Why not back your RAID array up to Amazon Web Services?

______________________________________________________________

Some Facts:

MTBF or failure rates quoted by disk drive manufacturers are statistical projections of reliability. As with any population, outliers exist on both sides of the reliability curve. You don’t want to be on the short side of the curve, however. Moreover, studies have shown that manufacturers greatly overestimate reliability figures. I would highly recommend reading the paper by the Google Labs folks on disk reliability. They studied 100,000 drives at Google and found some surprising results. Among them: temperature and usage patterns had little effect upon failure rates; once a drive error had been noted by diagnostics (SMART), the failure rate was hugely increased (10X); and yet the predictive capability of diagnostics such as SMART was almost nil. Other researchers have also shown that the extra money paid for differing drive types or interfaces (SAS, SATA, Enterprise FC) has little bearing on failure rates. Bottom line? Use the cheapest name brand drive you can and expect failures. Plan for them accordingly.

______________________________________________________________

One Approach.

Please note that I am not endorsing any manufacturer; this is merely what works for me after many iterations and much pain. Here’s the setup:

1) RAID Array – Promise Vtrak VTE610fd SATA/SAS Fiber Channel RAID 6 box, with 16 slots for drives. It has dual Fiber Channel (FC) controllers, so that if one controller goes south we are still operating, plus dual power supplies and so on. I buy it empty and stuff it with SATA drives, typically 1.5TB drives. Fully loaded, this thing costs about $7-8K. It implements RAID 6, that is, RAID 5 with a second set of distributed parity blocks, so it can take a two drive failure and survive. You can choose numerous RAID configurations from within the management application, which is Web based. Currently we use Seagate drives, but only because we get a great price on them. I allocate two drives as hot spares, so the box can automatically "fix" a dead drive. With RAID overhead, the system effectively loses about 15%-18% of its capacity to protection data, but that’s not onerous. Note that there’s a difference in speed, and sometimes functionality, between a dedicated RAID box with specially designed controllers and software and a software program which implements a RAID scheme on your computer. Choose accordingly.

2) FC card for computers – about $200, PCI Express. Various manufacturers.

3) Amazon S3 (Simple Storage Service), unlimited encrypted storage in "the cloud". The price list is here; for me it costs $0.15/GB/mo, plus $0.10/GB for data moved in and $0.15/GB for data moved out of the service. Amazon just launched their Import/Export service, whereby you can mail them a disk or USB device with your files and they will load it into the ‘cloud’ for you, if you want to initially load a huge set of data. For those of you unfamiliar with Amazon Web Services, Amazon has built an entire range of diverse web service functions which are made available on their global computer infrastructure: pay as you go, accessible from the net, infinitely scalable, and including things like running virtual ‘machines’, disk storage, compute clouds, and the like. It is hugely popular; many of your favorite web sites are probably really an instance of a virtual machine, storage, load balancing and so forth made up from components in the Amazon cloud.

To be fair, many others are in this game too, such as Rackspace, with the titans, such as Microsoft, Sun+Oracle (Snorcle), and many startups racing to enter the field.

The bottom line is that we are at a point now where enough applications have been written to allow normal humans to avail themselves of various aspects of these services.

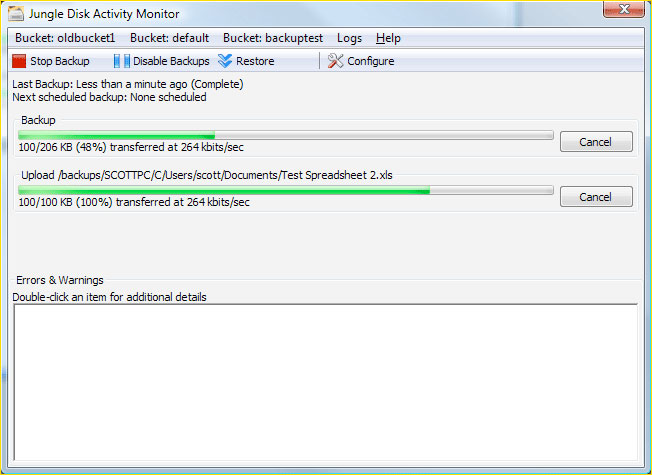

4) Jungle Disk – This application runs a scheduled backup on a specified folder via the Amazon Simple Storage Service, and it can resume a partially transferred file at any time, for $2/mo plus the Amazon cost. Note that the files are encrypted with your encryption key, so only you can read them; to Amazon they are a blob of spinning random bits. Plus, it can upload only changed disk blocks, meaning that it will look at the changes made to a disk file and only send the changed bits. Note: in order to have some semblance of speed, it caches a copy of your files locally. This means you have 2x your local storage needs, but only 1x goes to Amazon. Some find this objectionable, I don’t.

5) Management – I manage the RAID array from its Web interface. Once set up it needs little attention, however I have it email me whenever it notices anything amiss. Jungle Disk also allows me to use things like ChronoSync, SuperDuper and other simple applications to write to the S3 service, so I can use familiar tools. For those of you who want the ultimate easy interface, look at the popular Firefox browser extension "S3Fox", which allows you to drag and drop files straight into S3 from your browser.
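To get a feel for what the Amazon side of this setup costs, here is a rough sketch using only the prices quoted in item 3 ($0.15/GB/mo for storage, $0.10/GB in, $0.15/GB out). The function names and the 200GB figure are illustrative assumptions, the Jungle Disk fee is left out, and Amazon’s prices change, so check the current price list before relying on the numbers.

```python
def s3_monthly_storage_cost(stored_gb, price_per_gb_month=0.15):
    """Monthly storage charge in dollars for the amount of data held."""
    return stored_gb * price_per_gb_month


def s3_transfer_cost(moved_gb, price_per_gb):
    """One-off charge in dollars for moving data in or out."""
    return moved_gb * price_per_gb


stored_gb = 200  # illustrative archive size, not a recommendation

initial_upload = s3_transfer_cost(stored_gb, 0.10)  # data moved in
monthly = s3_monthly_storage_cost(stored_gb)        # data held
full_restore = s3_transfer_cost(stored_gb, 0.15)    # data moved out

print(f"Initial upload: ${initial_upload:.2f}")
print(f"Per month:      ${monthly:.2f}")
print(f"Full restore:   ${full_restore:.2f}")
```

At these rates a 200GB archive works out to about $20 to load, $30 a month to keep, and $30 to pull back down in full.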

The other tools I use are for verification. It’s critically important to make sure that what you wrote to disk, either locally on your RAID box or remotely to Amazon, is valid. Numerous utilities can do a binary comparison, or calculate a checksum or the like, and tell you if things don’t match. There have been many, many cases in the IT industry of someone restoring a backup only to find that the backup was defective. "Oops!" is a bad thing to hear when restoring valuable data. Validating up front is cheap insurance. Even the hallowed WinZip program can compute a checksum which you can compare against previous file versions. Try zipping your files into a few large blobs, then shove them onto the storage device. It’s easy to test them for reliability.
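As one way of doing this kind of check yourself, the sketch below records a SHA-256 checksum for every file in a folder and then reports any file in a backup copy that is missing or no longer matches. It is not the tool I use, just an illustration built on Python’s standard library; the function names and the folder paths in the usage comment are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Checksum a file in chunks so large image files never sit fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(folder):
    """Map each file's path (relative to folder) to its checksum."""
    folder = Path(folder)
    return {
        str(p.relative_to(folder)): sha256_of(p)
        for p in sorted(folder.rglob("*"))
        if p.is_file()
    }


def find_mismatches(original_manifest, backup_folder):
    """Return the files that are missing from the backup or differ from the original."""
    backup_manifest = build_manifest(backup_folder)
    return sorted(
        name
        for name, checksum in original_manifest.items()
        if backup_manifest.get(name) != checksum
    )


# Usage (hypothetical paths):
#   manifest = build_manifest("/Volumes/Photos/Antarctica")
#   bad = find_mismatches(manifest, "/Volumes/Backup/Antarctica")
#   print(bad or "backup matches")
```

Saving the manifest alongside the originals lets you rerun the comparison months later, which is when a quietly damaged backup would otherwise go unnoticed.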

______________________________________________________________

The Downside:

I can hear you all saying, "but I have 200GB of data and only a 1.5Mb/s DSL line". My observation is that 80% of most computers’ CPU cycles are wasted, with probably <10% of the network utilized on a good day. So set up the backup to run at night, wait a week or two, and you’ll be set. From then on, send only what’s changed. Alternatively, look at Amazon’s Import/Export service: you send them your physical disk or USB device and they load it into your personal storage bucket. They’ll also send you a disk with your files for restoration in case of total disaster. Please note this service is in beta test and will shortly become available to all.

Second downside – you lose all your data and need all 200GB downloaded, right now. At 1.5Mb/s (approx 187KB/s) this is about 300 hours of download, or about 12 days straight. This is why we keep a RAID system locally; the Amazon backup is "worst case". Also, do you really need all those files "right now"? And now that Amazon has unveiled its disk and USB key Import/Export service, you can simply ask them to send you a disk with your data. FedEx/DHL is a wonderful thing.
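The arithmetic behind that estimate is easy to redo for your own archive size and line speed. This is a small sketch that assumes decimal units (1GB = 10^9 bytes) and a link running flat out with no protocol overhead, so real transfers will be somewhat slower; the function name is made up for illustration.

```python
def transfer_hours(size_gb, link_mbps):
    """Hours needed to move size_gb gigabytes over a link of link_mbps megabits/s."""
    size_bits = size_gb * 1e9 * 8          # gigabytes -> bits
    seconds = size_bits / (link_mbps * 1e6)
    return seconds / 3600


hours = transfer_hours(200, 1.5)
print(f"{hours:.0f} hours, or {hours / 24:.1f} days")  # prints "296 hours, or 12.3 days"
```

Plugging in your own numbers makes it obvious when shipping a physical disk beats the wire.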

______________________________________________________________

Conclusions

We are in the age of "Cloud Computing", ultra large inexpensive hard drives, widely available broadband connectivity and easy to use software. Can you really justify not backing up your irreplaceable photographs via some mechanism? Why not let someone who runs a global data center maintain your files on their professionally run systems for you?

______________________________________________________________

About Geoff Baehr

Geoff is the former Chief Technology Officer – Networking, for Sun Microsystems, and Director of Systems Engineering, for TRW Information Networks. He holds thirteen US Patents in computer engineering, network security and spread spectrum radio design. He is currently a partner in US Venture Partners, a $3B venture firm, and is a technical advisor to both Microsoft and Sun Microsystems.

He does landscape photography with a Phase One P45 and Linhof M679cs.

The rest of his CV would fill another two screens and simply serve to depress you. He’s also a really nice guy. – Michael

October, 2009
